Our research goal is to bridge the gap between what robotic manipulators can do now and what they are ultimately capable of doing.

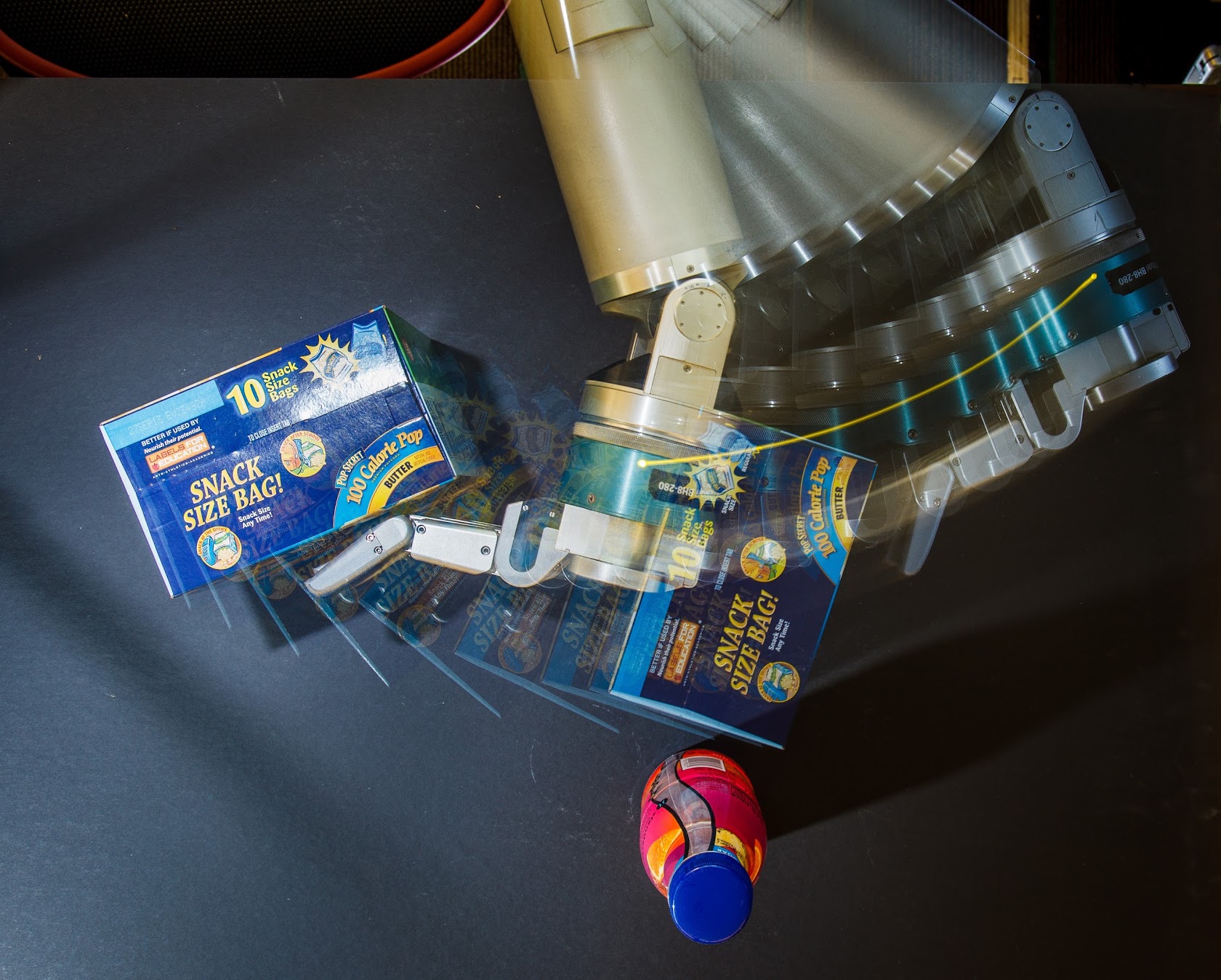

Our research thrust on nonprehensile physics-based manipulation has produced simple but effective models, integrated with proprioception and perception, that have enabled robots to fearlessly push, pull, and slide objects, and to reconfigure clutter that gets in the way of their primary task. Our work has made fundamental contributions to motion planning, trajectory optimization, state estimation, and information gathering for manipulation.

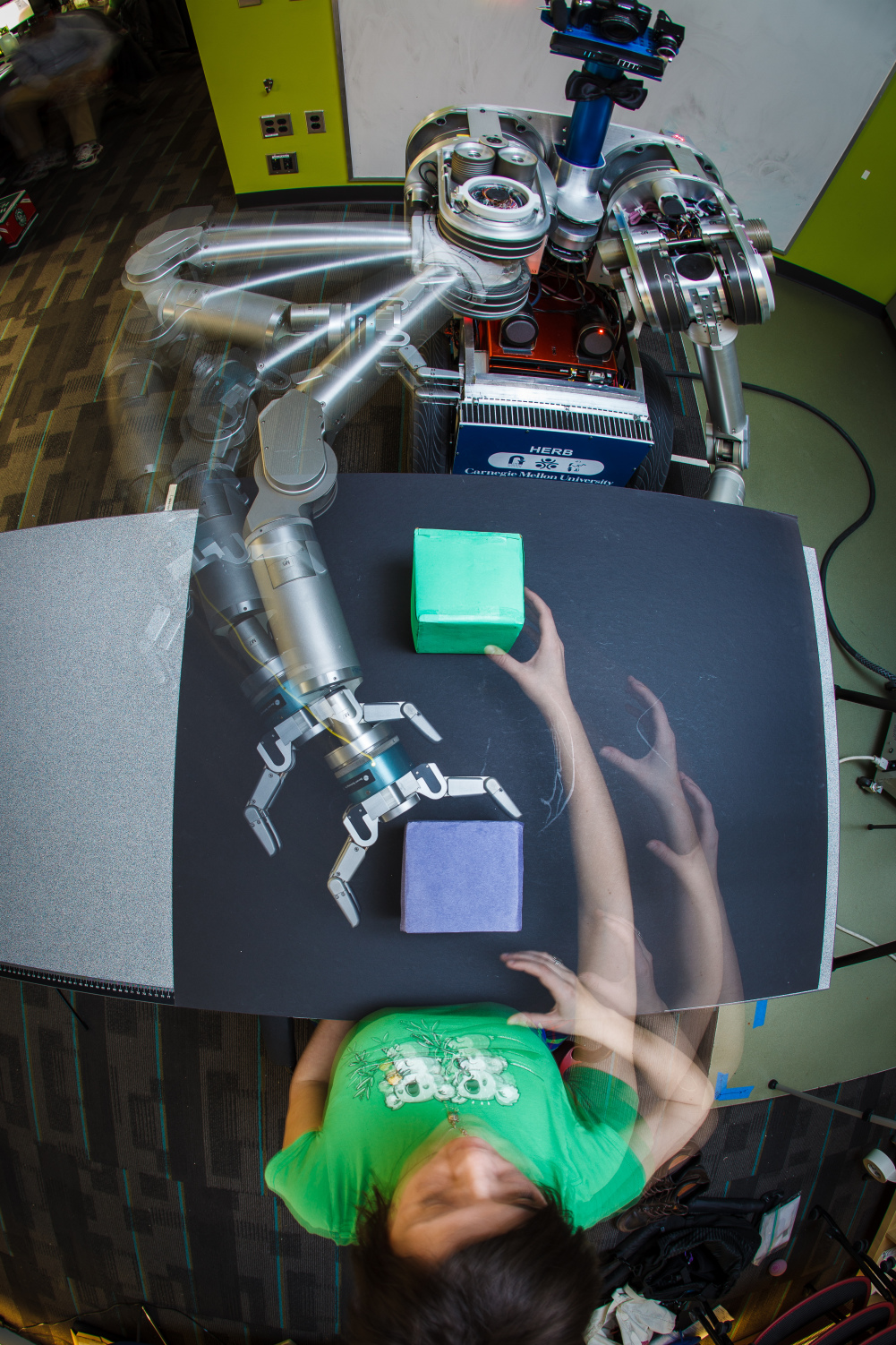

Our research thrust on the mathematics of human-robot interaction has produced a framework for collaboration using Bayesian inference to model the human collaborator, and trajectory optimization to generate fluent collaborative plans. Our work has made fundamental contributions to human-robot handovers, shared autonomy, the expressiveness of robot motion, and game-theoretic models of human-robot collaboration.

All of our research shares a unifying philosophy: we build mathematical models of physical behavior. Using these models, we have transferred behaviors from humans to robots, and across robots.

We are also passionate about building end-to-end systems (HERB, ADA, HRP3, CHIMP, Andy, among others) that integrate perception, planning, and control in the real world. Understanding the interplay between system components has helped us produce state-of-the-art algorithms for object recognition and pose estimation (MOPED) and for dense 3D modeling (CHISEL, now used by Google Project Tango).

Much of our work is open source and available on GitHub.